Transformer architecture:

The Transformer is a neural network architecture that differs markedly from traditional RNNs, CNNs, and LSTMs, owing to its attention mechanism and parallel processing capabilities (see ref).

“In this work we propose the Transformer, a model architecture eschewing recurrence and instead relying entirely on an attention mechanism to draw global dependencies between input and output. The Transformer allows for significantly more parallelization and can reach a new state of the art in translation quality after being trained for as little as twelve hours on eight P100 GPUs.” from Attention Is All You Need, 2017.

In traditional architectures, data is processed step by step, piece by piece, while Transformers process a whole sequence at once, looking for meaningful relationships among all of its parts. The article quoted above, which is the original paper published by Google researchers in 2017, contrasts RNNs with the new Transformer architecture. For readers unfamiliar with earlier neural networks, here are definitions of the commonly used ones. They all have their strengths, while the Transformer stands out for its parallelism and its handling of long-range dependencies.

RNN: Recurrent Neural Networks process data sequentially, one element at a time. They cannot analyze a whole sequence at once the way a Transformer can.
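To make the sequential constraint concrete, here is a minimal sketch of a toy RNN with a single scalar hidden state. The function name `rnn_forward` and the weights `w_in` and `w_rec` are illustrative assumptions, not part of any library.

```python
import math

def rnn_forward(inputs, w_in, w_rec, h0=0.0):
    """Run a toy scalar RNN over a sequence, one element at a time."""
    h = h0
    states = []
    for x in inputs:
        # Each step needs the previous hidden state h,
        # so the steps cannot be computed in parallel.
        h = math.tanh(w_in * x + w_rec * h)
        states.append(h)
    return states

# Hypothetical weights and inputs, chosen only for illustration.
states = rnn_forward([1.0, 0.5, -0.2], w_in=0.8, w_rec=0.5)
print(states)
```

The loop is the point: step three cannot start until step two has finished, which is the dependency the Transformer removes.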

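CNN: Convolutional Neural Networks process data that has a grid-like structure and are used mostly in image analysis. They slide small filters over local regions of the input, so each filter sees only a neighborhood of pixels at a time rather than the whole image.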

LSTM: Long Short-Term Memory networks are a type of RNN and come closest to the Transformer architecture in their ability to learn and retain long-term information. However, an LSTM still processes data step by step and cannot analyze it in the holistic way a Transformer can.

After the Transformer architecture was introduced, it largely displaced these architectures in language tasks. It is mainly used for translation and text generation. Its attention mechanism is what distinguishes it from other models.

The Transformer architecture properties:

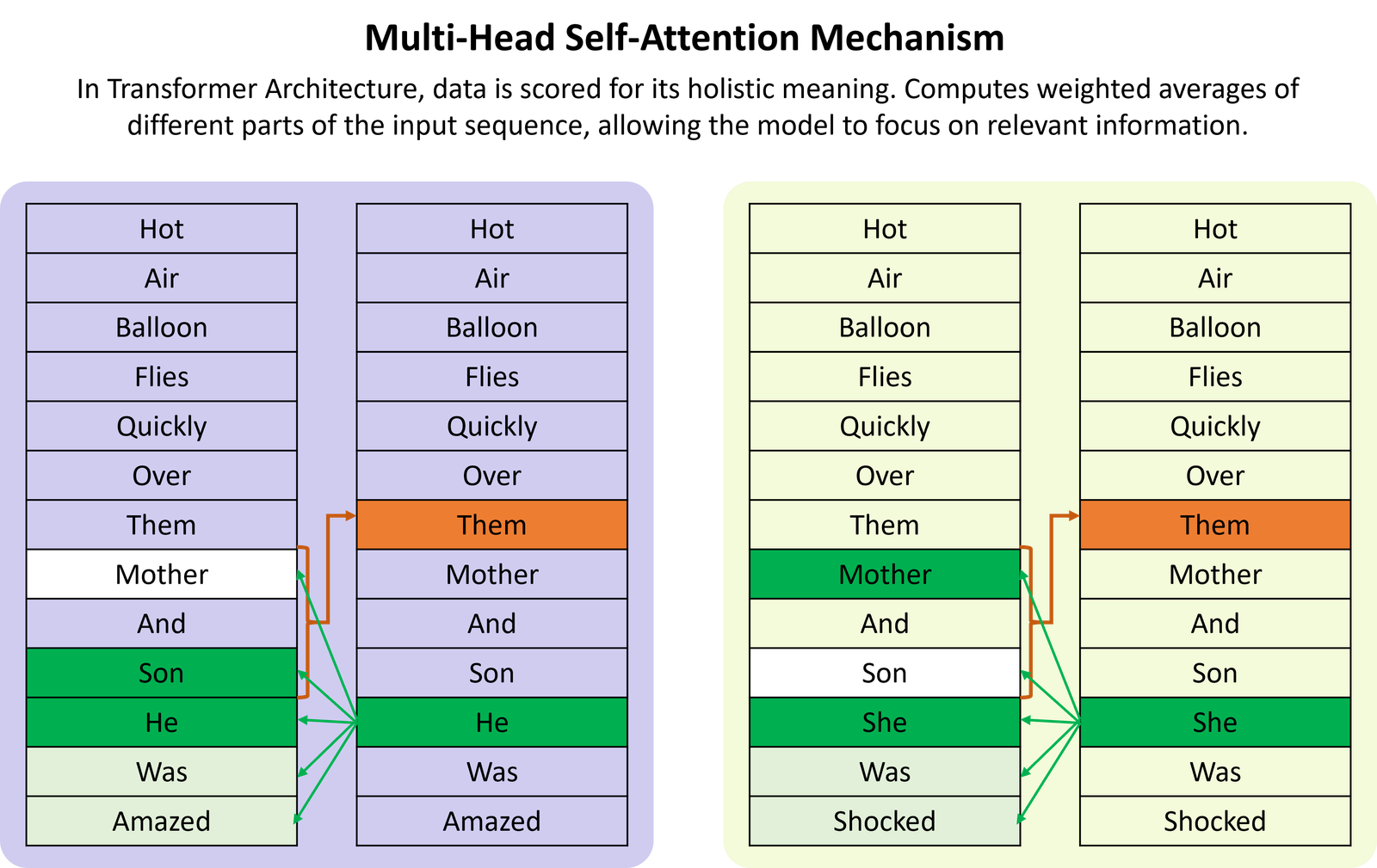

- Attention Mechanism: Scores how relevant each part of the input is to every other part, then computes weighted averages of the input using those scores. This is how the model builds relationships between pieces of content.

- Parallel Processing: Because attention involves no step-by-step recurrence, the Transformer can process all positions of a sequence in parallel. This increases training efficiency and makes it practical to train deep models on large-scale data.

- Long-Range Dependencies: Self-attention allows holistic understanding of data by considering all positions simultaneously.

- Scalability: The Transformer architecture scales well to large datasets and can handle long sequences. While it is mostly used for text translation and contextual understanding of text, it can be applied to images and video as well.
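The attention property listed above can be sketched in a few lines of plain Python. This is a simplified illustration of scaled dot-product attention, assuming the queries, keys, and values are all the raw input vectors; a real Transformer first passes the input through learned projection matrices, which are omitted here. The function names are illustrative.

```python
import math

def softmax(scores):
    """Turn raw scores into weights that are positive and sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention(queries, keys, values):
    """Scaled dot-product attention over lists of equal-length vectors."""
    d_k = len(keys[0])
    outputs = []
    for q in queries:
        # Score this query against every key in the sequence.
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k)
                  for k in keys]
        weights = softmax(scores)
        # The output is a weighted average of the value vectors.
        out = [sum(w * v[j] for w, v in zip(weights, values))
               for j in range(len(values[0]))]
        outputs.append(out)
    return outputs

# Three toy 2-dimensional token vectors (self-attention: Q = K = V = x).
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
out = attention(x, x, x)
print(out)
```

Each iteration of the outer loop depends only on the inputs, never on another position's output, which is why all positions can be computed in parallel on suitable hardware.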

Transformer Architecture Components:

Input Sequence:

- The input sequence consists of tokens. (For text, these are words or pieces of words.)
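As a rough illustration of turning text into tokens, the sketch below uses a whitespace tokenizer and a small vocabulary that maps each word to an integer id. This is an assumption made for simplicity: production models typically use subword tokenizers, and the helper names `build_vocab` and `encode` are hypothetical.

```python
def build_vocab(texts):
    """Assign an integer id to every distinct word; id 0 is reserved for unknown words."""
    vocab = {"<unk>": 0}
    for text in texts:
        for word in text.lower().split():
            if word not in vocab:
                vocab[word] = len(vocab)
    return vocab

def encode(text, vocab):
    """Convert a sentence into a list of token ids."""
    return [vocab.get(word, vocab["<unk>"]) for word in text.lower().split()]

vocab = build_vocab(["the cat sat", "the dog sat"])
ids = encode("the cat ran", vocab)
print(ids)  # "ran" is not in the vocabulary, so it maps to the <unk> id
```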

Embedding Layer:

- The embedding layer converts discrete tokens into vectors capturing semantic information.
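An embedding layer is, at its core, a lookup table with one vector per token id. The sketch below fills the table with random numbers purely for illustration; in a trained model these values are learned so that related tokens end up with similar vectors. The names and sizes are assumptions.

```python
import random

def make_embedding_table(vocab_size, d_model, seed=0):
    """Create one d_model-dimensional vector per token id (random, untrained)."""
    rng = random.Random(seed)
    return [[rng.uniform(-0.1, 0.1) for _ in range(d_model)]
            for _ in range(vocab_size)]

def embed(token_ids, table):
    """Look up the vector for each token id."""
    return [list(table[i]) for i in token_ids]

table = make_embedding_table(vocab_size=5, d_model=4)
vectors = embed([1, 2, 0], table)
print(len(vectors), len(vectors[0]))
```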

Positional Encoding:

- A unique representation of each position in the sequence is added to the token embeddings, so the model knows the order of the tokens.
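One common way to build these position representations is the sinusoidal scheme from the original paper, sketched below; learned position embeddings are a frequent alternative. Even dimensions use a sine and odd dimensions a cosine, each at a different wavelength. The function name is illustrative.

```python
import math

def positional_encoding(seq_len, d_model):
    """Sinusoidal encoding: sin on even dimensions, cos on odd dimensions."""
    pe = []
    for pos in range(seq_len):
        row = []
        for i in range(d_model):
            # Dimensions 2k and 2k+1 share the same wavelength.
            angle = pos / (10000 ** ((2 * (i // 2)) / d_model))
            row.append(math.sin(angle) if i % 2 == 0 else math.cos(angle))
        pe.append(row)
    return pe

pe = positional_encoding(seq_len=4, d_model=6)
for row in pe:
    print([round(v, 3) for v in row])
```

Each row would be added element-wise to the embedding vector of the token at that position.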

Encoder Layers:

- The encoder is a stack of identical layers (6 in the original paper). Each layer contains two sublayers:

  - Multi-Head Self-Attention Mechanism: Computes weighted averages of different parts of the input sequence, allowing the model to focus on relevant information.

  - Feed-Forward Network: Applies the same small network to each token's representation independently, further transforming what the attention sublayer produced.

- Encoded Sequence: Continuous representations capture both token semantics and positional information.
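The feed-forward sublayer can be sketched as follows. This is a simplified illustration with hand-picked weights: the same two-layer network is applied to every token vector separately, and the input is added back as a residual connection. Biases and the layer normalization used in the original architecture are omitted for brevity, and all names are assumptions.

```python
def relu(vector):
    return [max(0.0, x) for x in vector]

def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]

def feed_forward(token, w1, w2):
    """Two linear maps with a ReLU in between, applied to one token vector."""
    return matvec(w2, relu(matvec(w1, token)))

def ffn_sublayer(sequence, w1, w2):
    """Apply the same network to each position, then add the residual."""
    return [[x + y for x, y in zip(token, feed_forward(token, w1, w2))]
            for token in sequence]

# Hypothetical weights: expand 2 -> 3 dimensions, then project 3 -> 2.
w1 = [[1.0, 0.0], [0.0, 1.0], [1.0, -1.0]]
w2 = [[0.5, 0.0, 0.0], [0.0, 0.5, 1.0]]
seq = [[1.0, 2.0], [3.0, 1.0]]
out = ffn_sublayer(seq, w1, w2)
print(out)  # [[1.5, 3.0], [4.5, 3.5]]
```

Because each token is handled independently here, any mixing of information between tokens has to come from the attention sublayer.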

Output Sequence:

- The output sequence consists of the tokens generated so far, which are fed into the decoder.

Embedding Layer:

- The embedding layer converts discrete tokens into vectors capturing semantic information.

Positional Encoding:

- A unique representation of each position in the sequence is added to the token embeddings, so the model knows the order of the tokens.

Decoder Layers:

- The decoder consists of multiple layers, like the encoder, and data is processed in each layer in turn. As in the encoder layers, a Feed-Forward Network is present, and each decoder layer also attends to the encoder's output. The encoder and decoder layers work together to produce output that is meaningful to a human reader. That is the strength of the Transformer architecture: it can generate text that approaches human-written quality.
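One detail of the decoder in the original architecture, not covered above, is that its self-attention is masked: a position may attend only to itself and to earlier positions, so the model cannot look at tokens it has not generated yet. The sketch below shows this causal masking on a matrix of raw attention scores; the function name and the all-zero scores are illustrative assumptions.

```python
import math

def causal_attention_weights(scores):
    """scores[i][j] is the raw score of position i attending to position j.

    Position i may only see positions 0..i; later positions get weight 0.
    """
    weights = []
    for i, row in enumerate(scores):
        visible = row[: i + 1]
        m = max(visible)
        exps = [math.exp(s - m) for s in visible]
        total = sum(exps)
        masked_tail = [0.0] * (len(row) - i - 1)
        weights.append([e / total for e in exps] + masked_tail)
    return weights

# With equal scores, each position spreads its attention evenly over what it can see.
scores = [[0.0, 0.0, 0.0] for _ in range(3)]
weights = causal_attention_weights(scores)
for row in weights:
    print(row)
```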

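Linear + Softmax:

- A sentence, for example, can be translated in multiple ways. The linear layer turns the decoder output into a score for every token in the vocabulary, and softmax converts those scores into probabilities, from which the most likely token is selected.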
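As a minimal sketch of this final step, the code below projects a decoder output vector onto a tiny three-word vocabulary, applies softmax, and picks the highest-probability word. The vocabulary, weights, and decoder output are made up for illustration, and choosing the single most likely token (greedy decoding) is only one option; beam search and sampling are common alternatives.

```python
import math

def linear(vector, weights, bias):
    """One score (logit) per vocabulary entry."""
    return [sum(w * x for w, x in zip(row, vector)) + b
            for row, b in zip(weights, bias)]

def softmax(logits):
    """Convert logits into probabilities that sum to 1."""
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical values, chosen only to make the example concrete.
vocab = ["hello", "world", "bonjour"]
decoder_output = [1.0, 2.0]
weights = [[0.1, 0.2], [0.3, 0.1], [0.5, 0.9]]
bias = [0.0, 0.0, 0.0]

logits = linear(decoder_output, weights, bias)
probs = softmax(logits)
best = vocab[max(range(len(probs)), key=probs.__getitem__)]
print(best, [round(p, 3) for p in probs])
```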